AI and machine learning are revolutionizing automated analytics, fueling smarter decisions across industries. But with this power comes a pervasive challenge: the AI black box.

These complex models can produce highly accurate predictions and invaluable insights, yet often without clear, human-understandable explanations for how they arrived at those conclusions. This lack of transparency can erode trust, mask inherent biases, and significantly hinder effective decision-making and problem-solving.

This article delves into the critical need for understanding AI black box models in automated analytics, explores the latest trends in Explainable AI (XAI), and provides actionable strategies for building trust and transparency in your AI deployments.

The Challenge of the AI Black Box in Analytics

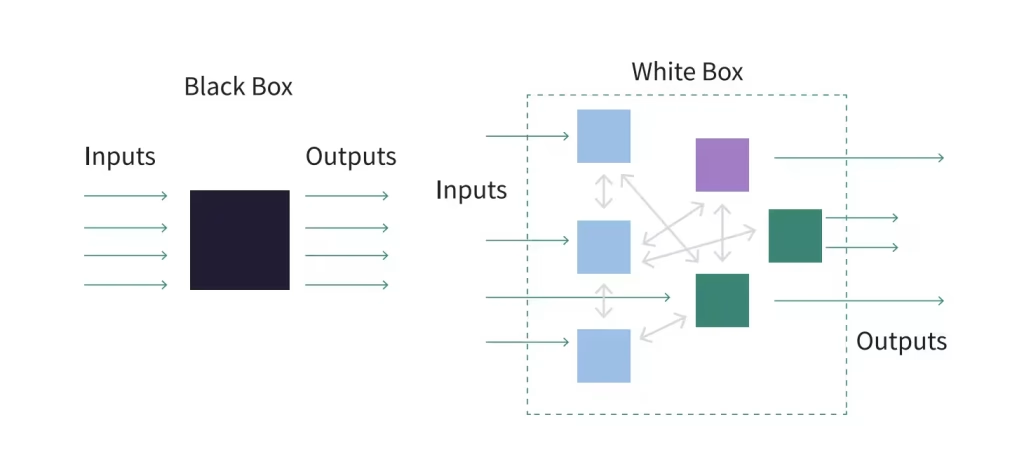

The “AI black box” refers to AI systems whose internal workings are so intricate that even their developers struggle to understand the precise reasoning behind their outputs. Imagine an AI system flagging a significant anomaly in your financial data, recommending an immediate audit. While the anomaly detection might be accurate, without understanding why the AI made that specific flag, you’re left with a trust deficit. Was it a genuine threat, or a subtle, unnoticed bias in the training data? This lack of clarity is the essence of the AI black box problem.

Key challenges posed by the AI black box in automated analytics include:

- Reduced Trust and Adoption: If users don’t understand how an AI system makes decisions, they are less likely to trust its outputs, leading to lower adoption rates and underutilization of valuable insights.

- Difficulty in Debugging and Optimization: When an AI model produces an incorrect or undesirable outcome, diagnosing the root cause becomes incredibly difficult without visibility into its decision-making process. This hinders effective debugging and model refinement.

- Bias and Fairness Concerns: AI models can inadvertently learn and perpetuate biases present in their training data. Without transparency, identifying and mitigating these biases is a significant hurdle, potentially leading to unfair or discriminatory outcomes, especially in high-stakes applications like loan approvals or hiring.

- Regulatory Compliance: As governments worldwide introduce stricter regulations around AI, particularly concerning data privacy, fairness, and accountability (e.g., the EU AI Act), the ability to explain AI decisions becomes a legal and ethical imperative.

- Lack of Knowledge Transfer: When an AI system operates as a black box, it’s challenging to extract valuable insights or “knowledge” from its reasoning to inform other business processes or human decision-making.

The Rise of Explainable AI (XAI): Demystifying the Black Box

The growing recognition of these challenges has fueled rapid advancements in Explainable AI (XAI). XAI aims to make AI models more transparent and interpretable, allowing users to understand why and how decisions are made. This emphasis on transparency as a prerequisite for trustworthy AI is a critical trend for 2025 and beyond.

XAI techniques generally fall into two main categories:

1. Intrinsic Explainability (White Box Models)

These models are inherently interpretable due to their simpler structures, meaning their decision-making process can be easily understood without additional tools. They are often preferred in high-stakes industries where transparency is crucial. Examples include:

- Decision Trees: These models represent decisions in a hierarchical, rule-based structure, making the decision path clear and traceable. For instance, in credit scoring, a decision tree might show how loan approvals are based on factors like income, credit history, and debt-to-income ratio.

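- Linear Regression: A statistical method that models the output as a weighted sum of the input variables plus an intercept. Each weight (coefficient) indicates how much the prediction changes for a one-unit increase in that feature, holding the other features constant; comparing weights across features is only meaningful when the features are on comparable scales.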

- Rule-Based Systems: These systems use explicitly defined “if-then” rules to determine outcomes, often used in expert systems where decisions must be traceable.
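To make the idea of a traceable decision path concrete, here is a minimal Python sketch of the credit-scoring example: a tiny, hand-written decision tree that returns its decision together with every test it passed. Because each branch is an explicit if-then test, the same code also reads as a small rule-based system. The `Applicant` fields, the thresholds, and the "refer to underwriter" outcome are hypothetical; a real tree would learn its splits from data.

```python
from dataclasses import dataclass


@dataclass
class Applicant:
    income: float                 # annual income (hypothetical units)
    credit_history_years: float
    debt_to_income: float         # e.g. 0.35 means 35%


def approve_loan(a: Applicant) -> tuple[str, list[str]]:
    """Walk a hand-written decision tree and record every test on the path.

    The thresholds are illustrative assumptions, not learned from data.
    """
    path: list[str] = []
    if a.debt_to_income > 0.40:
        path.append(f"debt_to_income {a.debt_to_income:.2f} > 0.40")
        return "deny", path
    path.append(f"debt_to_income {a.debt_to_income:.2f} <= 0.40")

    if a.credit_history_years < 2:
        path.append(f"credit_history_years {a.credit_history_years} < 2")
        # Thin credit file: fall back to an income test.
        if a.income >= 60_000:
            path.append(f"income {a.income:,.0f} >= 60,000")
            return "approve", path
        path.append(f"income {a.income:,.0f} < 60,000")
        return "refer to underwriter", path

    path.append(f"credit_history_years {a.credit_history_years} >= 2")
    return "approve", path


decision, why = approve_loan(Applicant(income=48_000, credit_history_years=1, debt_to_income=0.30))
print(decision)
for step in why:
    print("  because", step)
```

The returned path is the explanation: a loan officer can see exactly which conditions led to the referral, with no additional tooling.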

2. Post-Hoc Explainability (for Black Box Models)

These techniques explain complex, black-box models after they have been trained. Because models like deep neural networks and ensemble methods (such as random forests and gradient-boosted trees) lack inherent transparency, post-hoc methods are used to interpret their predictions. Key techniques include:

- Local Interpretable Model-agnostic Explanations (LIME): LIME explains individual predictions of any black-box model by perturbing the input around the instance of interest, weighting the perturbed samples by their proximity to it, and fitting a simple, interpretable surrogate (such as a sparse linear model) to the black box’s outputs on those samples. The surrogate’s weights highlight which input features most influenced that particular prediction.

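- SHapley Additive exPlanations (SHAP): Based on Shapley values from cooperative game theory, SHAP assigns each feature an “importance value” for a particular prediction by averaging the feature’s marginal contribution over all possible combinations (coalitions) of the other features. The attributions are locally accurate, meaning they sum to the difference between the model’s prediction and a baseline (expected) prediction, and they can be aggregated across many predictions to give a global view of the model.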

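- Partial Dependence Plots (PDPs) and Individual Conditional Expectation (ICE) Plots: These visualization techniques show how predictions change as one feature (or a pair of features) varies. A PDP shows the average effect across the dataset, revealing the model’s overall behavior, while an ICE plot draws one curve per instance, exposing heterogeneous effects, such as interactions, that the average can hide.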
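The following sketch shows what SHAP, PDPs, and ICE plots compute, using plain Python on a hypothetical three-feature toy model. `shapley_values` computes exact Shapley values by brute force, filling in "absent" features from a single baseline input; this costs 2^n model calls, which is why SHAP implementations use faster approximations and typically average over a background dataset rather than a single baseline. `ice_curve` and `partial_dependence` trace predictions as one feature sweeps a grid, for one instance and averaged over a dataset respectively. The model, inputs, and function names are illustrative assumptions, not any library's API.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Sequence

Model = Callable[[Sequence[float]], float]


def shapley_values(model: Model, x: Sequence[float], baseline: Sequence[float]) -> list[float]:
    """Exact Shapley values for one prediction, by enumerating all coalitions.

    A feature "absent" from a coalition takes its baseline value. Cost grows
    as 2^n model calls, so this is only practical for a handful of features.
    """
    n = len(x)

    def value(coalition: set[int]) -> float:
        z = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return model(z)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions of this size
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis


def ice_curve(model: Model, x: Sequence[float], feature: int, grid: Sequence[float]) -> list[float]:
    """ICE: predictions for one instance as a single feature sweeps the grid."""
    curve = []
    for g in grid:
        z = list(x)
        z[feature] = g
        curve.append(model(z))
    return curve


def partial_dependence(model: Model, data: Sequence[Sequence[float]], feature: int,
                       grid: Sequence[float]) -> list[float]:
    """PDP: the pointwise average of the ICE curves over a dataset."""
    curves = [ice_curve(model, row, feature, grid) for row in data]
    return [sum(points) / len(curves) for points in zip(*curves)]


# Hypothetical toy model with one interaction term (z0 * z2).
def toy_model(z: Sequence[float]) -> float:
    return 2 * z[0] + 3 * z[1] + z[0] * z[2]


x, baseline = [1.0, 2.0, 3.0], [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x, baseline)
print(phi, sum(phi), toy_model(x) - toy_model(baseline))

data = [[1.0, 2.0, 3.0], [0.0, 1.0, -1.0], [2.0, 0.0, 1.0]]
grid = [0.0, 1.0, 2.0]
print("PDP:", partial_dependence(toy_model, data, 0, grid))
print("ICE row 1:", ice_curve(toy_model, data[1], 0, grid))
```

Here the attributions sum to `f(x) - f(baseline)` (local accuracy), and the interaction term `z0 * z2` is split evenly between features 0 and 2. The ICE curve for the second row rises with slope 1 while the PDP rises with slope 3, which is exactly the kind of heterogeneity an averaged plot can hide.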

Building Trust in Automated Analytics: Actionable Strategies

Understanding the “why” behind AI decisions is paramount for fostering trust. Here are actionable strategies to incorporate XAI and build trust in your automated analytics:

1. Prioritize Transparency from Inception

- Define Clear AI Principles: Establish internal guidelines and principles for AI development and deployment that prioritize transparency, fairness, and accountability. Microsoft and Google, for example, have both published their own AI principles.

- Communicate Limitations: Be upfront about the limitations of your AI tools. Clearly communicate where AI excels and where human oversight remains necessary to foster collaboration rather than competition between AI and human decision-making.

- Document and Disclose: Thoroughly document the underlying AI algorithm’s logic, the data inputs used for training, and the methods used for model evaluation and validation. This disclosure is crucial for stakeholders to trust the AI’s effectiveness and fairness.
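One lightweight way to make this documentation routine is to store it as a structured record next to the model, in the spirit of "model cards". The sketch below is a minimal Python version; every field name and value (including the metric numbers) is a hypothetical placeholder, not a standard schema or a real result.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelCard:
    """A minimal, hypothetical documentation record for one deployed model."""
    name: str
    version: str
    algorithm: str                  # what the model is and how it decides
    intended_use: str
    training_data: str              # source, date range, known gaps
    evaluation: dict[str, float]    # metric name -> value on held-out data
    limitations: list[str] = field(default_factory=list)
    explainability_method: str = "none"

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2, sort_keys=True)


card = ModelCard(
    name="invoice-anomaly-detector",
    version="1.3.0",
    algorithm="Gradient-boosted trees over engineered invoice features",
    intended_use="Flag invoices for human audit; not for automatic rejection",
    training_data="Internal invoices, Jan 2021 - Dec 2023; excludes subsidiaries",
    evaluation={"precision": 0.91, "recall": 0.78},  # placeholder values, not real results
    limitations=["Not validated on non-EUR currencies"],
    explainability_method="Per-prediction Shapley value attributions",
)
print(card.to_json())
```

Keeping the card in version control alongside the model makes it easy to check, at review time, that the documentation was updated with each retrain.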

2. Implement Explainable AI Techniques

- Choose Appropriate XAI Methods: Select XAI techniques (intrinsic or post-hoc) based on the complexity of your models and the specific interpretability needs of your use case. For high-stakes applications, inherently interpretable models might be preferred.

- Integrate XAI into MLOps: Embed XAI methods directly into your Machine Learning Operations (MLOps) pipeline. This allows for continuous monitoring of model fairness, accountability, and explainability throughout the AI lifecycle.

- Visualize Explanations: Present AI explanations in user-friendly formats, such as interactive dashboards, decision trees, or feature importance plots, making them accessible to a wider audience, including business users and decision-makers.
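A feature-importance plot needs importance scores, and one model-agnostic way to produce them in a pipeline step is permutation importance: shuffle one feature column at a time and measure how much the model's error grows. The sketch below is plain Python with a hypothetical model that ignores its third feature, plus a text bar chart standing in for a dashboard plot; the function names, feature names, mean-squared-error metric, and data are assumptions for illustration.

```python
import random
from typing import Callable, Sequence

Model = Callable[[Sequence[float]], float]


def mean_squared_error(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def permutation_importance(model: Model, X: Sequence[Sequence[float]], y: Sequence[float],
                           n_repeats: int = 5, seed: int = 0) -> list[float]:
    """Average increase in error when each feature's column is shuffled.

    Shuffling breaks the link between that feature and the target: a large
    increase means the model relies on the feature, zero means it ignores it.
    """
    rng = random.Random(seed)
    baseline_error = mean_squared_error(y, [model(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        total = 0.0
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            X_perm = [list(row[:j]) + [v] + list(row[j + 1:]) for row, v in zip(X, column)]
            total += mean_squared_error(y, [model(row) for row in X_perm]) - baseline_error
        importances.append(total / n_repeats)
    return importances


def text_bar_chart(names: Sequence[str], values: Sequence[float], width: int = 30) -> None:
    """Print features sorted by importance as horizontal '#' bars."""
    top = max(values) or 1.0
    for name, v in sorted(zip(names, values), key=lambda pair: -pair[1]):
        print(f"{name:>10} {'#' * round(width * v / top):<{width}} {v:.3f}")


# Hypothetical model and data: the model never reads feature 2.
def model(r: Sequence[float]) -> float:
    return 3 * r[0] + 0.5 * r[1]


X = [[i, (i * 7) % 20, (i * 3) % 5] for i in range(20)]
y = [model(row) for row in X]
scores = permutation_importance(model, X, y)
text_bar_chart(["income", "tenure", "zip_digit"], scores)
```

Running a step like this on every retrain and tracking the scores over time is one way to notice when a model starts relying on an unexpected feature.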

3. Foster a Culture of AI Literacy and Collaboration

- Invest in AI Training: Provide comprehensive training for your teams – from data scientists to business analysts and end-users – on how AI models work, the importance of explainability, and how to interpret AI outputs. This empowers employees to understand and effectively leverage AI tools.

- Build Cross-Functional Teams: Encourage collaboration between IT, legal, business teams, and domain experts. This multi-disciplinary approach ensures that AI solutions meet both technical requirements and real-world business needs, while also adhering to ethical and compliance standards.

- Establish Feedback Channels: Create clear and accessible channels for users to provide feedback on their experiences with AI systems. This demonstrates a commitment to continuous improvement and addresses concerns proactively.

4. Ensure Robust Data Governance and Security

- Centralized Governance: Implement centralized governance for AI-driven workflows to proactively address data security and privacy concerns. This includes robust security measures like role-based access controls and legal hold policies.

- Data Minimization and Quality: Prioritize data minimization, collecting only the data necessary for the AI’s purpose. Furthermore, ensure high data quality, as biases or errors in training data directly impact AI model performance and fairness.

- Transparent Privacy Policies: Craft privacy policies that are genuinely easy to understand, outlining exactly what data is collected, why, and how it’s used within your AI systems. Provide simple opt-out options for users to manage their data preferences.
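Data minimization and role-based access can both be enforced at the point where an AI workflow reads data. The following Python sketch filters each record down to the fields registered for a given purpose, after checking that the caller's role may use that purpose. The purpose names, roles, and field lists are hypothetical, and in practice these checks may live in the data platform rather than in application code.

```python
from typing import Any

# Hypothetical purpose registry: the only fields each AI use case may read.
ALLOWED_FIELDS: dict[str, set[str]] = {
    "fraud_scoring": {"amount", "merchant_category", "country"},
    "churn_model": {"tenure_months", "plan", "support_tickets"},
}

# Hypothetical role-based access control: which roles may run which purpose.
ROLE_PURPOSES: dict[str, set[str]] = {
    "fraud_analyst": {"fraud_scoring"},
    "marketing_analyst": {"churn_model"},
}


def minimized_view(record: dict[str, Any], purpose: str, role: str) -> dict[str, Any]:
    """Return only the fields registered for `purpose`, if `role` may use it."""
    if purpose not in ROLE_PURPOSES.get(role, set()):
        raise PermissionError(f"role {role!r} may not use data for {purpose!r}")
    allowed = ALLOWED_FIELDS[purpose]
    return {key: value for key, value in record.items() if key in allowed}


record = {
    "customer_name": "Jane Doe",
    "email": "jane@example.com",
    "amount": 129.99,
    "merchant_category": "electronics",
    "country": "DE",
}
# Direct identifiers such as name and email never reach the fraud model.
print(minimized_view(record, "fraud_scoring", "fraud_analyst"))
```

Declaring the allowed fields per purpose also gives the privacy policy something concrete to point to: the registry is the list of what each AI system uses.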

5. Continuous Monitoring and Auditing

- Regular Performance Evaluation: Continuously monitor and evaluate the performance of your AI systems, not just for accuracy but also for fairness, bias, and adherence to ethical guidelines.

- Auditability: Design AI systems to be auditable, allowing for independent review of their decision-making processes. This is crucial for regulatory compliance and demonstrating accountability.

- Address Mistakes Transparently: When your AI system makes a mistake, be upfront and transparent about it. Open communication, even in challenging situations, builds greater long-term trust than attempting to conceal issues.
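Fairness monitoring can start with something as simple as comparing outcome rates across groups. The sketch below computes each group's selection rate (for example, the loan-approval rate) and the ratio of the lowest rate to the highest, flagging ratios below 0.8. That threshold borrows the "four-fifths rule" heuristic from US employment-selection guidelines; it is a screening signal, not a legal determination, and the right metric and threshold depend on your use case. The group labels and outcomes are hypothetical.

```python
from collections import defaultdict
from typing import Hashable, Sequence


def selection_rates(outcomes: Sequence[int], groups: Sequence[Hashable]) -> dict:
    """Share of positive outcomes (1 = approved) within each group."""
    totals: dict = defaultdict(int)
    positives: dict = defaultdict(int)
    for outcome, group in zip(outcomes, groups):
        totals[group] += 1
        positives[group] += int(outcome)
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates: dict) -> float:
    """Lowest group selection rate divided by the highest (1.0 = parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical batch of recent decisions from a deployed model.
outcomes = [1, 1, 1, 0, 1, 1, 0, 0, 0, 1]
groups = ["A"] * 5 + ["B"] * 5

rates = selection_rates(outcomes, groups)
ratio = disparate_impact_ratio(rates)
print(rates, f"ratio={ratio:.2f}")
if ratio < 0.8:
    print("Fairness check failed: route to human review and investigate.")
```

Logging each run's rates and ratio alongside the model version also leaves an audit trail that an independent reviewer can inspect later.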

The Future is Transparent: Expert Insights and Key Takeaways

The trend toward transparent AI is clear. Regulatory bodies, industry leaders, and consumers alike are demanding greater clarity and accountability from AI systems. The US Defense Advanced Research Projects Agency (DARPA), for example, framed the goal of its Explainable AI (XAI) program as creating AI systems whose actions human users can more easily understand.

Key Takeaways:

- Explainable AI is not a luxury, but a necessity. For automated analytics to deliver their full potential, trust and understanding are paramount.

- Transparency builds trust, which drives adoption. When users understand the “why,” they are more likely to embrace and effectively utilize AI-driven insights.

- Proactive measures are crucial. Integrating XAI from the design phase, establishing clear governance, and fostering AI literacy will yield the most impactful results.

For more insights into practical applications, explore diverse XAI use cases across industries, from healthcare diagnostics to financial fraud detection.

Embrace Transparency for Enhanced AI Value

Opening the AI black box is not merely a technical endeavor; it’s a strategic imperative for any organization leveraging automated analytics. By embracing Explainable AI, prioritizing transparency, and fostering a culture of trust and understanding, businesses can unlock the true potential of their AI investments. This leads to more reliable insights, fairer outcomes, greater regulatory compliance, and ultimately, a more impactful and trustworthy relationship between humans and machines.

Don’t let your valuable AI insights remain shrouded in mystery. Start your journey towards transparent and trustworthy automated analytics today. Explore XAI solutions, invest in AI literacy for your teams, and build a framework that champions clarity and accountability.

FAQ Section: People Also Ask About AI and Trust

What is the AI black box problem?

The AI black box problem refers to the challenge of understanding how complex AI models, particularly deep learning networks, arrive at their predictions or decisions. Their internal workings are often opaque, making it difficult for humans to trace the reasoning process. This lack of transparency can lead to issues with trust, bias, and debugging.

Why is Explainable AI (XAI) important?

XAI is crucial because it addresses the opacity of AI systems by making their decisions understandable to humans. This fosters trust, helps identify and mitigate biases, aids in debugging and improving models, and ensures compliance with growing regulatory requirements for AI accountability and fairness.

How does XAI help build trust in automated analytics?

XAI builds trust by providing clear, interpretable explanations for AI-driven insights. When users understand the factors and reasoning behind an AI’s output, they are more confident in its recommendations. This transparency encourages greater adoption of automated analytics, allows for human oversight, and facilitates collaboration between human experts and AI systems.

What are some common techniques used in Explainable AI?

Common XAI techniques include:

- Intrinsic Explainability: Using inherently transparent models like Decision Trees and Linear Regression.

- Post-Hoc Explainability: Applying methods to explain complex models after training, such as LIME (Local Interpretable Model-agnostic Explanations), SHAP (SHapley Additive exPlanations), and Partial Dependence Plots (PDPs).

What are the main benefits of transparent AI in business?

The main benefits of transparent AI in business include:

- Increased user trust and adoption of AI systems.

- Improved ability to debug and optimize AI models.

- Better identification and mitigation of algorithmic bias.

- Enhanced compliance with AI regulations and ethical guidelines.

- Facilitated knowledge transfer from AI insights to human decision-making.